Startup's Efficient AI Chips Challenge Nvidia

22 Feb

Summary

- FuriosaAI develops efficient AI inference chips, contrasting with GPU dominance.

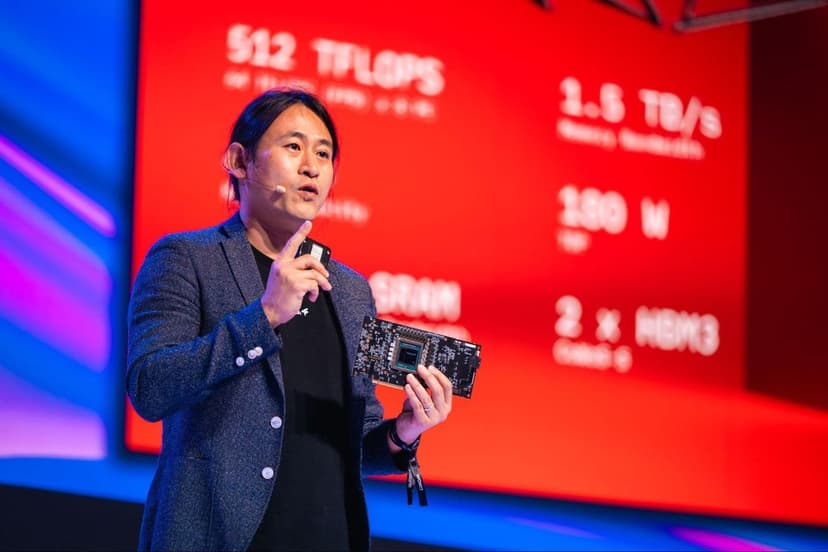

- Their RNGD processor uses Tensor Contraction Processor architecture for high performance.

- The chips offer high performance with significantly lower power consumption than GPUs.

FuriosaAI, a South Korean startup, is rethinking AI hardware with inference chips built for high performance and efficiency, as an alternative to the GPUs that dominate the market. The company's latest processor, RNGD, features a proprietary Tensor Contraction Processor (TCP) architecture, designed to natively execute the multidimensional tensor math at the core of AI models.
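For readers unfamiliar with the term, a tensor contraction multiplies two arrays and sums over an index they share; matrix multiplication is the simplest case. The sketch below is a plain-Python illustration of that operation only. It says nothing about how the TCP architecture implements it, and the function names `contract` and `contract_batched` and the sample values are hypothetical.

```python
def contract(a, b):
    """Contract a[i][k] with b[k][j] by summing over the shared index k."""
    inner = len(b)
    cols = len(b[0])
    # Every row of `a` must have as many entries as `b` has rows.
    assert all(len(row) == inner for row in a), "shared index sizes must match"
    return [
        [sum(row[k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for row in a
    ]


def contract_batched(x, w):
    """Apply the same contraction to each sample in a batch: x[n][i][k] with w[k][j]."""
    return [contract(sample, w) for sample in x]


# Hypothetical toy values standing in for activations and weights.
activations = [[1, 2], [3, 4]]
weights = [[5, 6], [7, 8]]

result = contract(activations, weights)
batched = contract_batched([activations, activations], weights)
print(result)  # [[19, 22], [43, 50]]
```

Neural network layers such as attention and linear projections reduce to many contractions of this kind over larger, higher-dimensional arrays, which is why hardware built around the operation can be attractive for inference.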

This architecture gives FuriosaAI's chips a clear power advantage over traditional GPUs. The RNGD processor can run demanding AI models at 180 watts, compared with the 600 watts or more that GPUs require. That efficiency matters as data centers face growing energy and infrastructure constraints.
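To put the two power figures in perspective, the following back-of-the-envelope sketch compares energy use per chip over a year. It uses only the 180 W and 600 W numbers quoted above; continuous operation at the quoted power is an assumption made for illustration, and the comparison says nothing about relative throughput, so it is not a performance-per-watt figure.

```python
RNGD_WATTS = 180  # figure quoted in the article
GPU_WATTS = 600   # lower bound quoted in the article ("600 watts or more")
HOURS_PER_YEAR = 24 * 365


def annual_kwh(watts, utilization=1.0):
    """Energy in kWh for one chip over a year.

    `utilization` is a hypothetical knob: 1.0 assumes the chip draws its
    quoted power around the clock, which is an illustrative assumption.
    """
    return watts * utilization * HOURS_PER_YEAR / 1000


power_ratio = RNGD_WATTS / GPU_WATTS
saved_kwh = annual_kwh(GPU_WATTS) - annual_kwh(RNGD_WATTS)

print(f"RNGD draws {power_ratio:.0%} of the GPU figure")
print(f"Difference per chip per year: {saved_kwh:.1f} kWh")
```

Under these assumptions each chip draws 30% of the GPU figure, a difference of roughly 3,700 kWh per chip per year before cooling overhead is counted.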

To compete with Nvidia's established software ecosystem, FuriosaAI has co-designed its hardware and software from the ground up. Its stack integrates with standard developer tools such as PyTorch and vLLM, avoiding the need to replicate Nvidia's CUDA software stack. This strategy lets enterprises adopt high-performance AI inference without overhauling their existing workflows or investing in extensive new infrastructure.

FuriosaAI is targeting the sectors most affected by power and infrastructure costs: on-premises deployments for regulated industries, enterprise customers focused on total cost of ownership (TCO), regional cloud providers, and telcos with power-constrained edge environments. Founded in 2017, the company has secured strategic partnerships, including SK Hynix as a memory supplier and LG as a key customer, building on South Korea's strong semiconductor heritage.

Looking ahead, FuriosaAI anticipates a data center landscape characterized by heterogeneous compute, where various AI-specific silicon architectures coexist. The company plans to evolve its offerings to meet the demands of hyperscalers, with a strong emphasis on software development to support new models and deployment tools.