Beyond Nvidia: OpenAI Taps Cerebras for Lightning Code

12 Feb

Summary

- OpenAI launches GPT-5.3-Codex-Spark for rapid coding.

- New model uses Cerebras Systems hardware, a shift from Nvidia.

- Codex-Spark offers 15x faster generation speeds.

OpenAI introduced GPT-5.3-Codex-Spark on Thursday, a new AI model engineered for near-instantaneous coding responses. The model marks OpenAI's first major inference partnership outside its established Nvidia-based infrastructure: it runs on hardware from Cerebras Systems, a chipmaker known for low-latency AI processing.

The collaboration with Cerebras arrives during a complex period for OpenAI, marked by evolving relationships with chip suppliers, internal restructuring, and new ventures. The company emphasizes that GPUs remain foundational for training and broad inference, while Cerebras complements this by excelling in low-latency workflows.

Codex-Spark is purpose-built for real-time coding collaboration. OpenAI claims it generates output 15 times faster than previous iterations, though the company did not disclose specific latency metrics. OpenAI acknowledges that the speed comes with trade-offs: the model is less capable than the full Codex model at highly complex, multi-step programming challenges.

The model supports a 128,000-token context window and text-only inputs. It is available as a research preview to ChatGPT Pro subscribers via the Codex app, command-line interface, and VS Code extension, with select enterprise partners receiving API access for evaluation.

The model runs on Cerebras's Wafer Scale Engine 3, a chip designed to minimize communication overhead in AI workloads. The move underscores the growing importance of inference economics as AI companies scale consumer-facing products and seek to reduce their dependence on a single supplier such as Nvidia.

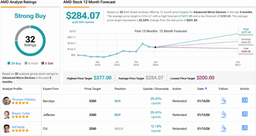

OpenAI's diversification across alternative chip suppliers, including Cerebras, AMD, and Broadcom, comes amid reports of a cooling relationship with Nvidia. By working with multiple hardware partners, OpenAI aims to secure price-performance advantages and operational flexibility.

The launch of Codex-Spark coincides with a turbulent stretch at OpenAI, including the disbandment of safety-focused teams and criticism over the introduction of advertisements into ChatGPT. The company also secured a Pentagon contract that requires its technology to be available for "all lawful uses."

OpenAI's broader technical vision for Codex includes a coding assistant that seamlessly blends rapid interactive editing with autonomous background tasks. Codex-Spark lays the groundwork for the interactive component, with future releases expected to support more sophisticated multi-agent coordination.

Because it depends on specialized hardware capacity, Codex-Spark operates under separate rate limits during its research preview. OpenAI is monitoring usage patterns to decide how to scale the offering.

The launch arrives in a competitive market for AI-powered developer tools, with rivals such as Anthropic and the major cloud providers also investing heavily in AI coding capabilities. The rapid adoption of OpenAI's Codex app, which has passed one million downloads, highlights developer interest in these tools.