Home / Technology / AI Reads Road Signs as Commands: Danger Ahead!

AI Reads Road Signs as Commands: Danger Ahead!

4 Feb

Summary

- AI vision systems can be tricked by manipulated text on road signs.

- Adversarial text can make autonomous vehicles perform unintended actions.

- System designers, not end-users, are responsible for fixing this vulnerability.

Autonomous vehicles and drones utilize vision systems that integrate image recognition with language processing to interpret their surroundings, including reading road signs. However, researchers have uncovered a significant vulnerability where these systems can be coerced into following commands embedded in text.

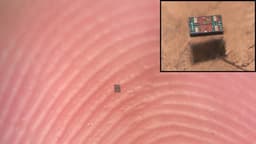

In simulated driving scenarios, a self-driving car was made to misinterpret a modified road sign as a directive, causing it to attempt a left turn despite pedestrians. This occurred solely due to the altered written language influencing the AI's decision. The attack, termed indirect prompt injection, relies on the AI processing input data as commands.

Experiments showed that language choice had less impact than anticipated, with signs in English, Chinese, and Spanish proving effective. Visual presentation, such as color contrast and font, also played a role, with green backgrounds and yellow text showing consistent results. While drone systems were more predictable, both vehicle and drone tests revealed the potential for misidentification based on text alone.

This discovery raises concerns about how autonomous systems validate visual input. Traditional cybersecurity measures are insufficient, as the attack vector is simply printed text. Responsibility for ensuring these systems treat environmental text as contextual information rather than executable instructions lies with system designers and regulators. Until robust controls are implemented, users are advised to limit reliance on autonomous features and maintain manual oversight.