AI Memory Breakthrough: Agents Recall Past Decisions

11 Feb

Summary

- New observational memory compresses conversation history into logs.

- Stable context windows reduce token costs by up to 10x.

- System prioritizes past agent decisions over broad knowledge recall.

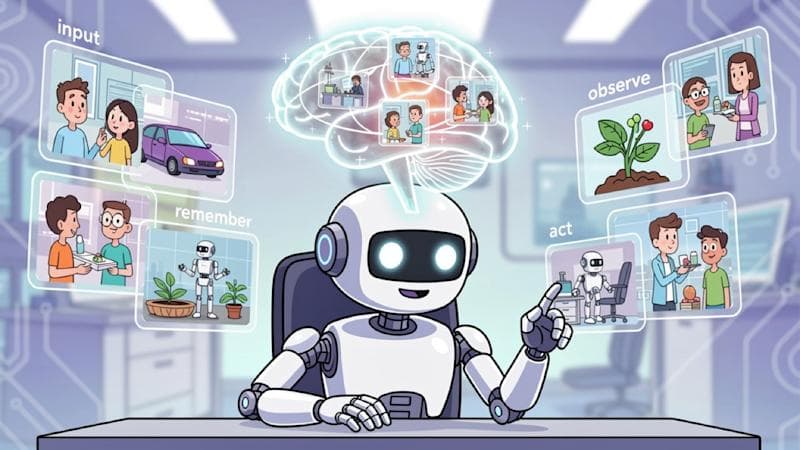

Modern AI workflows are moving beyond the limitations of Retrieval-Augmented Generation (RAG), especially for long-running, tool-heavy agents. Observational memory, an open-source technology, offers an alternative architecture focused on persistence and stability.

This system employs two background agents to compress conversation history into a dated observation log, eliminating dynamic retrieval. It reports compression of 3-6x for text and 10-40x for large tool outputs. The method prioritizes recall of the agent's own past decisions over searching a broad external corpus.

The architecture divides the context window into two parts: observations and raw message history. The Observer agent compresses messages once they reach a token threshold, and the Reflector agent restructures the observation log. Unlike retrieval-based setups, this process requires no specialized storage such as a vector or graph database.
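The two-part context window described above can be illustrated with a minimal Python sketch. It is not the project's actual code: the class name `ObservationalMemory`, the `summarize` and `reflect` callables (stand-ins for the Observer and Reflector model calls), both thresholds, and the whitespace-based token count are all assumptions made for illustration. The article also does not say when the Reflector runs, so triggering it on a second token threshold is a guess.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Callable, List, Optional


def count_tokens(text: str) -> int:
    # Assumption: a crude whitespace count stands in for a real tokenizer.
    return len(text.split())


@dataclass
class ObservationalMemory:
    # Hypothetical stand-ins for the Observer and Reflector agents.
    summarize: Callable[[List[str]], str]
    reflect: Callable[[List[str]], List[str]]
    message_threshold: int = 50        # assumed trigger for the Observer
    observation_threshold: int = 200   # assumed trigger for the Reflector
    observations: List[str] = field(default_factory=list)
    messages: List[str] = field(default_factory=list)

    def add_message(self, text: str, today: Optional[date] = None) -> None:
        """Append a raw message; compress once the raw history is too long."""
        self.messages.append(text)
        if sum(count_tokens(m) for m in self.messages) >= self.message_threshold:
            self._observe(today or date.today())

    def _observe(self, day: date) -> None:
        # Observer step: replace raw messages with one dated observation.
        note = self.summarize(self.messages)
        self.observations.append(f"{day.isoformat()}: {note}")
        self.messages.clear()
        # Reflector step: restructure the log when it grows past its threshold.
        if sum(count_tokens(o) for o in self.observations) >= self.observation_threshold:
            self.observations = self.reflect(self.observations)

    def context(self) -> str:
        # The prompt is the observation log followed by the raw messages;
        # nothing is retrieved from an external store.
        return "\n".join(self.observations + self.messages)
```

In this sketch the memory lives in plain Python lists, which mirrors the claim that no vector or graph database is involved. A real deployment would pass model-backed functions for `summarize` and `reflect` and use the model's own tokenizer for counting.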